This note is based on the Coursera course by Andrew Ng.

(This is just a study note for myself. Some sentences may be copied from the course or may read awkwardly, because I am not a native speaker. But I want to learn deep learning in English, so things will get better and better :))

By now, we have seen most of the ideas we need to implement a deep neural network: forward propagation and back propagation in a network with a single hidden layer, logistic regression, vectorization, and random initialization. Now we are going to put those ideas together so that we can implement our own deep neural network.
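To see how these pieces fit together, here is a minimal NumPy sketch of a deep network's forward pass. It assumes ReLU in the hidden layers and a sigmoid output unit (as in logistic regression), random weights scaled by 0.01 with zero biases, and an explicit loop over layers with each layer vectorized over all examples. The function names, the `W1`/`b1` dictionary keys, and the layer sizes are hypothetical choices for illustration, not code from the course.

```python
import numpy as np

def initialize_parameters(layer_dims, seed=0):
    """Randomly initialize W[l] and b[l] for l = 1..L.

    layer_dims[0] is the number of input features; layer_dims[l] is the
    number of units in layer l. Small random weights break symmetry;
    biases can safely start at zero.
    """
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(layer_dims)):
        params["W" + str(l)] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * 0.01
        params["b" + str(l)] = np.zeros((layer_dims[l], 1))
    return params

def relu(z):
    return np.maximum(0, z)

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def forward(X, params):
    """Vectorized forward propagation for all m examples at once.

    X has shape (n_x, m). Hidden layers use ReLU; the output layer
    uses sigmoid, so each output is a probability in (0, 1).
    """
    L = len(params) // 2          # one W and one b per layer
    A = X
    for l in range(1, L):         # hidden layers 1..L-1
        Z = params["W" + str(l)] @ A + params["b" + str(l)]
        A = relu(Z)
    ZL = params["W" + str(L)] @ A + params["b" + str(L)]
    return sigmoid(ZL)            # output layer L

# Hypothetical sizes: 3 input features, 5 hidden layers of 5 units, 1 output unit
layer_dims = [3, 5, 5, 5, 5, 5, 1]
params = initialize_parameters(layer_dims)
X = np.random.default_rng(1).standard_normal((3, 4))  # 4 hypothetical examples as columns
Y_hat = forward(X, params)
print(Y_hat.shape)  # (1, 4)
```

The loop over layers is unavoidable here, but inside each layer the matrix product handles every example in one step, which is where vectorization pays off.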

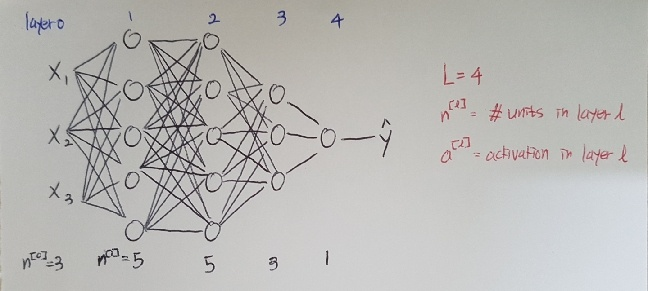

So, what is a deep neural network? Logistic regression is a very "shallow" model, whereas this model with 5 hidden layers is a much deeper one. Shallow versus deep is a matter of degree.
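One way to make "a matter of degree" concrete is to describe each model by its list of layer sizes and count its layers. This small sketch assumes the course's convention that the input layer is not counted, so logistic regression is a 1-layer network and a network with 5 hidden layers has L = 6. The helper names and layer sizes are hypothetical.

```python
def count_layers(layer_dims):
    """Number of layers L: hidden layers plus the output layer.
    The input layer (layer_dims[0]) is not counted."""
    return len(layer_dims) - 1

def count_hidden_layers(layer_dims):
    """Every layer except the input and the output layer."""
    return len(layer_dims) - 2

# Hypothetical layer sizes; the first entry is the number of input features
logistic_regression = [3, 1]                  # no hidden layers: very shallow
one_hidden_layer = [3, 4, 1]                  # the shallow network seen earlier
five_hidden_layers = [3, 5, 5, 5, 5, 5, 1]    # a much deeper model

for name, dims in [("logistic regression", logistic_regression),
                   ("1 hidden layer", one_hidden_layer),
                   ("5 hidden layers", five_hidden_layers)]:
    print(f"{name}: L = {count_layers(dims)}, hidden = {count_hidden_layers(dims)}")
```

Moving down the list, the only thing that changes is how many hidden layers sit between the input and the output, which is why there is no sharp line between "shallow" and "deep".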

Let's now go through the notation we use to describe deep neural networks.
