This note is based on the Coursera course by Andrew Ng.

(This is just a study note for myself. Some sentences may be copied or awkward, because I am not a native English speaker. But I want to learn Deep Learning in English, so everything will get better and better :))

INTRO

MAIN

WHAT

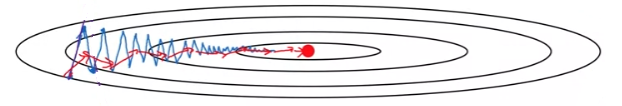

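When we take gradient descent steps, they oscillate up and down while moving only slowly toward the minimum. This up-and-down oscillation slows gradient descent down and prevents us from using a much larger learning rate, because a larger step could overshoot in the vertical direction and diverge.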
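A minimal sketch of this oscillation, assuming a hypothetical elongated cost J(w1, w2) = 0.5(w1² + 100·w2²). It is not from the course. It was chosen so that w2 (the "vertical" direction) is much steeper than w1 (the "horizontal" direction). With α = 0.019, w2 flips sign every step while w1 shrinks by only about 2% per step. For this cost, plain gradient descent diverges once α exceeds 2/100 = 0.02, which the run with α = 0.025 illustrates.

```python
# Hypothetical elongated cost: J(w1, w2) = 0.5 * (w1**2 + 100 * w2**2).
# w1 is the shallow "horizontal" direction, w2 the steep "vertical" one.
def grad(w1, w2):
    """Gradient of J with respect to (w1, w2)."""
    return w1, 100.0 * w2


def gradient_descent(alpha, steps, w1=-10.0, w2=1.0):
    """Plain gradient descent; returns every (w1, w2) visited, starting point included."""
    path = [(w1, w2)]
    for _ in range(steps):
        g1, g2 = grad(w1, w2)
        w1 -= alpha * g1
        w2 -= alpha * g2
        path.append((w1, w2))
    return path


# alpha = 0.019: w2 changes sign every step (up/down), w1 creeps slowly toward 0.
for w1, w2 in gradient_descent(alpha=0.019, steps=5):
    print(f"w1 = {w1:8.3f}   w2 = {w2:8.3f}")

# alpha = 0.025: each vertical swing is 1.5x the previous one, so w2 blows up.
print(gradient_descent(alpha=0.025, steps=20)[-1])
```

Raising α speeds up the horizontal progress but makes the vertical swings grow instead of shrink, which is exactly the trade-off described above.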

So, in the vertical direction we want learning to be slower, but in the horizontal direction we want it to be faster. Momentum does this by taking an exponentially weighted average of the gradients. In the vertical direction, where we want to slow down, the average cancels out the positive (up) and negative (down) derivatives, so it ends up close to zero. In the horizontal direction, all the derivatives point to the right, so the average stays fairly large. As a result, gradient descent with momentum takes a smoother path: smaller oscillations in the vertical direction and faster progress in the horizontal direction toward the minimum.
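To check the averaging argument with numbers, here is a small sketch using made-up gradient sequences. The vertical derivative alternates +1, -1, +1, ... and the horizontal derivative is always +1. It applies the exponentially weighted average v = β·v + (1 - β)·x with v starting at 0 and β = 0.9, which is an assumed (commonly used) value.

```python
def ewa(values, beta=0.9):
    """Exponentially weighted average: v_t = beta * v_{t-1} + (1 - beta) * x_t, with v_0 = 0."""
    v = 0.0
    for x in values:
        v = beta * v + (1 - beta) * x
    return v


steps = 50
vertical = [(-1.0) ** t for t in range(steps)]  # hypothetical: +1, -1, +1, ... (up, down, up, ...)
horizontal = [1.0] * steps                      # hypothetical: always +1 (always pointing right)

print(f"vertical average:   {ewa(vertical):.3f}")
print(f"horizontal average: {ewa(horizontal):.3f}")
```

The vertical average ends up around -0.05, while the horizontal one is about 0.99. The up and down steps cancel, and the consistent rightward steps add up.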

HOW

The algorithm has two hyperparameters: the learning rate α, and β, which controls the exponentially weighted average.
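A minimal sketch of the algorithm, assuming the common formulation with the (1 - β) factor. Some references drop that factor and fold it into α. On each iteration t, we compute dW and db on the current mini-batch and then update:

$$v_{dW} = \beta\, v_{dW} + (1-\beta)\, dW, \qquad W = W - \alpha\, v_{dW}$$
$$v_{db} = \beta\, v_{db} + (1-\beta)\, db, \qquad b = b - \alpha\, v_{db}$$

The Python below applies the same rule to the two weights of a hypothetical elongated cost J(w1, w2) = 0.5(w1² + 100·w2²). Passing β = 0 turns it back into plain gradient descent. The values α = 0.1 and β = 0.9 are assumptions for illustration. Plain gradient descent on this cost diverges for any α above 0.02, so it is run with α = 0.019.

```python
# Hypothetical elongated cost: J(w1, w2) = 0.5 * (w1**2 + 100 * w2**2).
# w1 is the shallow "horizontal" direction, w2 the steep "vertical" one.
def grad(w):
    """Gradient (dw1, dw2) of the cost above."""
    return [w[0], 100.0 * w[1]]


def gd_momentum(alpha, beta, steps, w=(-10.0, 1.0)):
    """Gradient descent with momentum; beta=0.0 reduces it to plain gradient descent."""
    w = list(w)
    v = [0.0, 0.0]  # velocity (v_dw), initialized to zero
    for _ in range(steps):
        dw = grad(w)  # in practice: the gradient on the current mini-batch
        for i in range(2):
            v[i] = beta * v[i] + (1 - beta) * dw[i]  # exponentially weighted average
            w[i] = w[i] - alpha * v[i]               # step along the averaged gradient
    return w


def distance_to_min(w):
    """Euclidean distance from w to the minimum at (0, 0)."""
    return (w[0] ** 2 + w[1] ** 2) ** 0.5


plain = gd_momentum(alpha=0.019, beta=0.0, steps=100)  # just under the stability limit 0.02
momentum = gd_momentum(alpha=0.1, beta=0.9, steps=100)
print(f"plain GD:      distance to minimum = {distance_to_min(plain):.4f}")
print(f"with momentum: distance to minimum = {distance_to_min(momentum):.4f}")
```

After 100 steps, the momentum run should end much closer to the minimum than plain gradient descent. On this cost it stays stable at α = 0.1, five times the limit plain gradient descent can tolerate, because the averaged vertical gradient stays small.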

CONCLUSION