This note is based on the Coursera course by Andrew Ng.

(These are just my study notes. Some sentences may be copied or awkward because I am not a native English speaker. But I want to learn Deep Learning in English, so everything will get better and better :))

INTRO

There are a few optimization algorithms that are faster than gradient descent. In order to understand those algorithms, we need to be able to use exponentially weighted averages.

MAIN

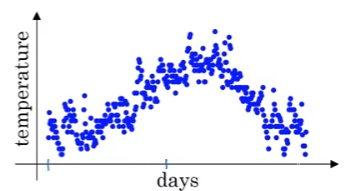

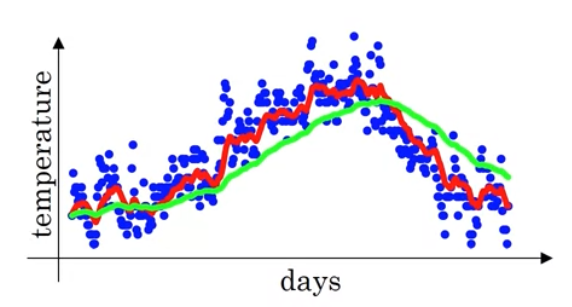

If we want to compute the trend, that is, a local or moving average of the temperature, here is what we can do.

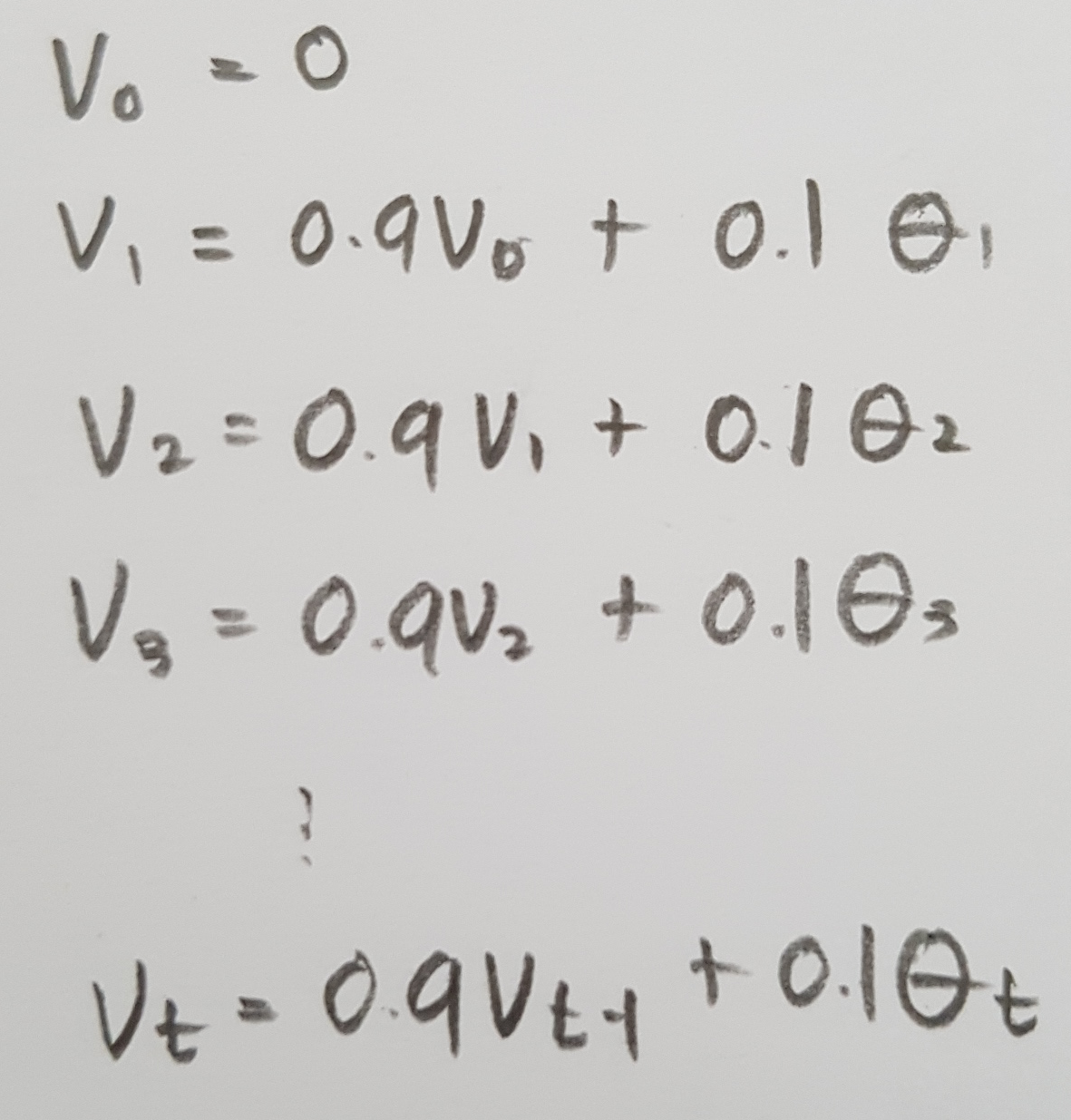

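Initialize V0 = 0. Then, for each day, take a weighted average of the previous value and that day's temperature: V1 = 0.9*V0 + 0.1*θ1 (θ1 is the temperature of the first day), V2 = 0.9*V1 + 0.1*θ2, and so on.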
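To make the update rule concrete, here is a minimal Python sketch of it. The function name `exponentially_weighted_average` and the sample temperatures are my own assumptions for illustration, not from the course.

```python
def exponentially_weighted_average(thetas, beta=0.9):
    """Return [V1, V2, ...] for the temperatures [θ1, θ2, ...], starting from V0 = 0."""
    v = 0.0  # V0 = 0
    averages = []
    for theta in thetas:
        # Vt = beta * V(t-1) + (1 - beta) * θt
        v = beta * v + (1 - beta) * theta
        averages.append(v)
    return averages


# Hypothetical daily temperatures, only for illustration.
temperatures = [10.0, 20.0, 15.0, 18.0]
for day, v in enumerate(exponentially_weighted_average(temperatures), start=1):
    print(f"V{day} = {v:.3f}")
```

Because V0 = 0, the first few values start well below the real temperatures and only catch up after several days.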

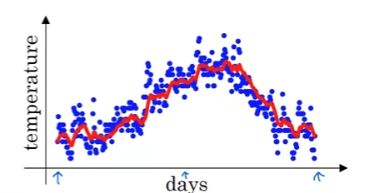

So, if we compute this and plot it in red, we will get this.

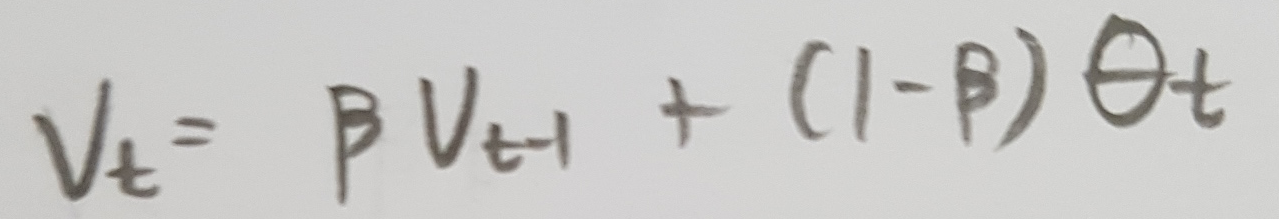

So, in general, Vt is:

$V_t = \beta V_{t-1} + (1 - \beta)\theta_t$

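Vt is approximately an average over the last 1/(1 - β) days' temperature. With β = 0.9, that is about 10 days (the red line). If we set β = 0.98 and plot it, Vt averages over about 50 days and we get the green line.

With a higher value of β, the plot is much smoother because it averages over more days of temperature.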
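The effect of β on smoothness can be checked with a small Python sketch. Everything here is an assumption for illustration: the synthetic "temperature" data (a yearly sine wave plus random noise), the helper names, and the use of the mean absolute day-to-day change as a rough measure of how jagged a curve is.

```python
import math
import random


def ewa(thetas, beta):
    """Exponentially weighted average with V0 = 0: Vt = beta*V(t-1) + (1-beta)*θt."""
    v, out = 0.0, []
    for theta in thetas:
        v = beta * v + (1 - beta) * theta
        out.append(v)
    return out


def mean_abs_step(values):
    """Mean absolute day-to-day change: a rough 'jaggedness' score (lower = smoother)."""
    steps = [abs(b - a) for a, b in zip(values, values[1:])]
    return sum(steps) / len(steps)


# Synthetic data (not real measurements): seasonal trend plus noise, fixed seed.
rng = random.Random(0)
temperatures = [
    15 + 10 * math.sin(2 * math.pi * day / 365) + rng.gauss(0, 5)
    for day in range(365)
]

rough_09 = mean_abs_step(ewa(temperatures, beta=0.9))
rough_098 = mean_abs_step(ewa(temperatures, beta=0.98))

for beta, rough in [(0.9, rough_09), (0.98, rough_098)]:
    window = round(1 / (1 - beta))  # approximate number of days averaged over
    print(f"beta={beta}: about {window} days, mean daily change {rough:.3f}")
```

On this synthetic data the β = 0.98 curve changes less from day to day than the β = 0.9 curve, which matches the smoother green line.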

CONCLUSION