This note is based on Coursera course by Andrew ng.

(It is just study note for me. It could be copied or awkward sometimes for sentence anything, because i am not native. But, i want to learn Deep Learning on English. So, everything will be bettter and better :))

INTRO

If we suspect our neural network is overfitting our data, that is we have a high variance problem. One of the first things to try is regularization. The other way to address high variance is to get more training data. That is also quite reliable. However, we cannot have always get more training data. But adding regularization will often help to prevent overfitting, or to reduce the errors in our network.

MAIN

L1 and L2

Let's develop these ideas using logistic regression. Recap the logistic regression we try to minimize the cost function J. And to add regularization to the logistic regression, lambda/2m * norm of w squared.

Why do we regularize just the parameter w? Why don't we add something there about b as well? In practice, we could do this. But Andrew just omit because if we look at w it is usually a pretty high dimensional parameter vector, whereas b is just sigle number. So almost all the parameters are in w rather than b. So it won't make big difference.

If we use L1 regularization, then w will end up being sparse. It means that the w vector will have a lot of zeros in it.

How about a neural network? In a neural network, we have a cost function that is a function of all of our parameters. So the cost function is the sum of the losses summed over our m training examples. And add lambda/2m * sum over all of parameters the norm of w squared.

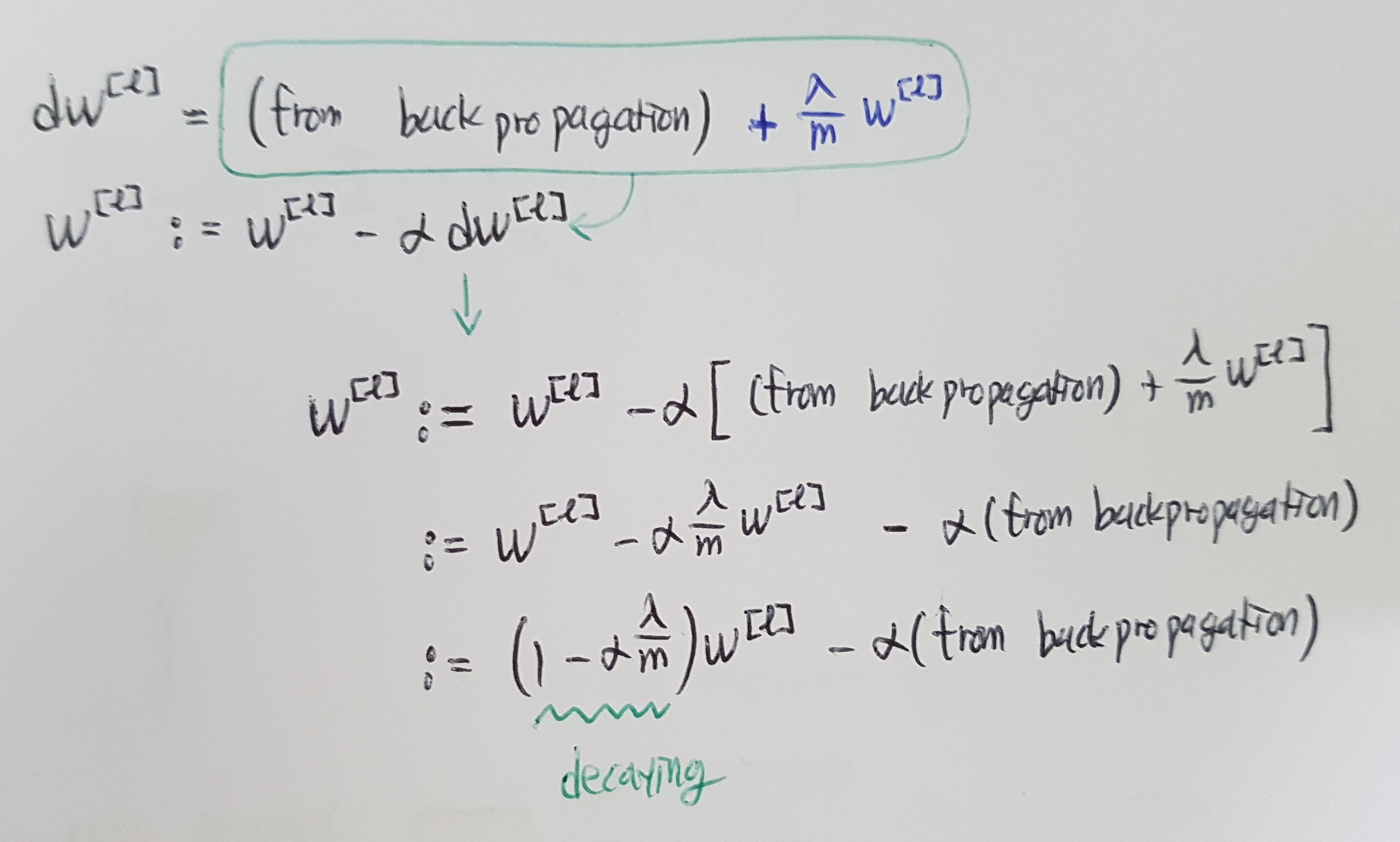

So how do we implement gradient descent with this? previously, we would complete dw using backprop, where backprop would give us the partial derivative of J respect to w. And then we update w[l]. Now that we have added this regularization term to the objective. we take dw and add lambda/m * w to it. And then we just compute this update same as before.

And it's the reason that L2 regularization is sometimes also called "weight decay". If we unroll the update, the term, 1 - alpha * lambda/m, shows that whatever the matrix w[l] is, we are going to make it a little bit smaller.

Why does regularization help with reducing variacnce problem?

If we crank regularization lambda to be really big, they will really incentivized to set the weight matirces W to be reasonably close to zero. Then this simplified neural network becomes a much smaller neural network. It is like a logistic regression unit, but stacked most as deep.

Here is another attempts at additional intuition. We are going to use Tanh function. If z takes on only a smallish range of parameters, then we are just using the linear regime of the tanh function. If z is allowed to wander up to larger values or smaller values, the activiation function starts to become less linear. So if the lambda is large, then we have that the W parameters will be relatively small, then z will also relatively small. And in particular, if z ends up taking relatively small values, then g(z) will be roughly linear. It could be every layer will be roughly linear, as we already have studied before. So, even if very deep network with a linear activation function, it is at the end able to compute a linear function.

Dropout

Dropout is another powerful regularization. With dropout, what we are going to do is go though each of the layers of network and set some probability of eliminating a node in neural network. We have a 0.5 chance of keeping each node and 0.5 chance of removing each node. And some nodes are eliminated. So, we end up with a much smaller, really much diminished network. And then we do back propagation training.

How do we implement the dropout? We will see the 'Inverted dropout' technieque.

Let's say we want to illustrate this with layer l=3. And we set a vector d3, which is going to be the dropout vector for the layer 3. The keep_prob will keep a given hidden unit by the probability. It means that there is 0.2 chance of eliminating any hidden unit. If we do this on Python, d3 will be a boolean array where value is true and false.

And then, we take our activations from the thrid layer, a3. There is a 20% chance of each of the elements being zero. This multiply operation ends up zeroing out the corresponding elements of d3.

We are going to take a3 and scale it up by dividing by keep_prob. It could be weird why we should divide it by keep_prob? Let's say for the sake of argument that we have 50 units in the third hidden layer. If we have 0.8 keep_prob, we end up with 10 units zeroed out. And if we look at the value of z[4], a[3] will be reduced by 20%. By which it means that 20% of the elements of a3 will be zeroed out. In order to not reduce the expected value of z[4], we need to divide a[3] by keep_prop because this will correct the reduced a[3] back up by roughly 20% that we need. So, it's not changed the expected value of a[3].

We will zero out different hidden units for difference training examples. If we make multiple passes through the same training set, we should randonly zero out different hidden units.

But we don't use dropout explicitly. Because when we are making predictions at the test time, we don't really want out output to be random. If we are impleneting dropout at test time, that add noise to our predictions.

How does it work?

1. If on every iteration we are working with a smaller neural network, it seems like have a regularizing effect.

2. Output unit cannot rely on any one feature because any one feature could go away at random. We are reluctant to put too much weight on any one input because it can go anyway. So, the output unit will be motivated to spread out the way. And by spreading all the weights, this will tend to have an effect of shrinking the squared norm of the weights. It is similar to L2 regularization.

Other regularization methods

1. Data augmentation

If we are overfitting, getting more training data can help. But getting more data can be expensive or sometimes cannot get more data. What we can do is augment our training set by taking image like flipping it horizontally or taking random crops of image, rotating image.

2. Early stopping

We plot training error or cost function J. And we also plot our dev set error. Dev set will usually go down for a while and then it will increase from there. And we find an iteration to stop training on out neural network halfway.

Why does it work? When we haven't run many iteration yet, our parameters W will be close to zero (althought we initialize W, it is very small). And W will be bigger and bigger. So, in the middle of iteration, we will have middle size of W. It is similar to L2 by picking a neural network with smaller norm for parameters W.

But early stopping has one downside. Machine learning process has a comprising several different steps. One is to build algorithm to optimize the cost function J. We have various tools to do that, such as gradient descent, momnetum, RMS prop, Adam, and so on. Second is not to overfitting. We have tools, such as regularization, getting more data. These two steps are completely seperate task. And the downside of early stopping is that this couples these two tasks. We no longer can work on these two steps independently. Because by stopping gradient descent early, we are sort of breaking optimizing cost function J. Then, we are not doing a great job reducing the cost function J. So, normally we use L2 alternatively.

CONCLUSION

Awesome!