This note is based on the Coursera course by Andrew Ng.

(This is just a study note for myself. Some sentences may be copied from the course or read awkwardly, because I am not a native English speaker. But I want to learn Deep Learning in English, so everything will get better and better :))

INTRO

When training a neural network, one of the techniques that will speed up training is normalizing the inputs.

MAIN

Why normalize inputs?

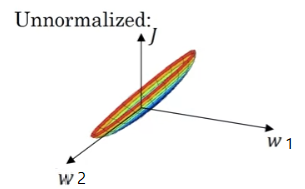

If we use unnormalized input features, the cost function is more likely to look like a very squished-out, elongated bowl.

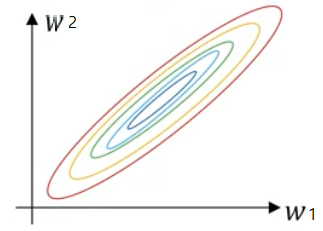

If the features are on very different scales, the parameters will end up taking on very different ranges of values too. For example, if feature X1 ranges from 1 to 1000 and feature X2 ranges from 0 to 1, then the parameters W1 and W2 will sit on very different scales, and the cost function can become an elongated bowl. So, if we plot the contours of this function, we get elongated ellipses like the ones above.

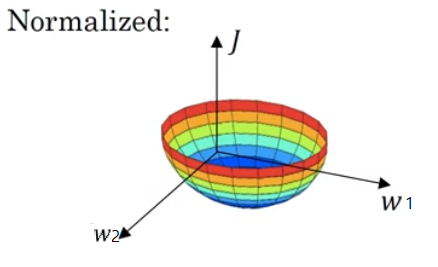

Whereas if we normalize the features, the cost function will on average look more symmetric.

If we run gradient descent on a cost function like the one on the left (the elongated one), we might have to use a very small learning rate, and gradient descent might need a lot of steps, oscillating back and forth, before it reaches the minimum. Whereas if the contours are more spherical, then wherever we start, gradient descent can pretty much go straight to the minimum.
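To make this concrete for myself, here is a small sketch. It is not from the course: it assumes a toy quadratic cost J(w) = 0.5 * (c1 * w1^2 + c2 * w2^2), where the curvatures c1 and c2 stand in for the feature scales, and the function name `gradient_descent_steps` is my own. The elongated bowl forces a tiny learning rate (anything above 2/1000 would diverge along the steep direction), so the flat direction converges very slowly.

```python
import numpy as np

def gradient_descent_steps(curvatures, learning_rate, start, tol=1e-6, max_steps=100_000):
    """Count plain gradient descent steps on J(w) = 0.5 * sum(curvatures * w**2)."""
    c = np.array(curvatures, dtype=float)
    w = np.array(start, dtype=float)
    for step in range(max_steps):
        if np.linalg.norm(w) < tol:      # close enough to the minimum at w = 0
            return step
        w = w - learning_rate * c * w    # the gradient of J is c * w
    return max_steps

# Elongated bowl: very steep along w1, very flat along w2.
# The learning rate must stay below 2 / 1000 or the w1 direction blows up.
elongated_steps = gradient_descent_steps([1000.0, 1.0], learning_rate=0.0019, start=[1.0, 1.0])

# Round bowl: same curvature in both directions, so a large step is safe.
round_steps = gradient_descent_steps([1.0, 1.0], learning_rate=0.9, start=[1.0, 1.0])

print("elongated bowl:", elongated_steps, "steps")
print("round bowl:", round_steps, "steps")
```

With these toy numbers the elongated bowl should need thousands of steps while the round bowl needs only a handful, which matches the picture of oscillating back and forth versus going straight to the minimum.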

How to normalize training sets?

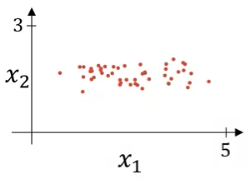

Let's say we have two input features. Normalizing the inputs corresponds to two steps.

1. Subtract out the mean (zero out the mean).

This means we just shift the training set until it has zero mean.

2. Normalize the variances.

In this example, X1 has a much larger variance than X2. To fix this, we divide each (zero-mean) feature by its own standard deviation.

Now the variances of X1 and X2 are both equal to one.
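The two steps above can be sketched in NumPy like this. It is only a minimal sketch under my own assumptions: the function name `normalize_features`, the small `eps` that guards against dividing by zero, and the random toy data are mine, and I assume X is stored with one row per feature and one column per training example.

```python
import numpy as np

def normalize_features(X, eps=1e-8):
    """Normalize X of shape (n_features, m_examples) to zero mean and unit variance per feature."""
    mu = X.mean(axis=1, keepdims=True)                # step 1: mean of each feature
    X_centered = X - mu                               # shift the data to zero mean
    sigma = np.sqrt((X_centered ** 2).mean(axis=1, keepdims=True))  # step 2: std of each feature
    X_norm = X_centered / (sigma + eps)               # scale each feature to variance about 1
    return X_norm, mu, sigma

# Toy data (made up): X1 has a much larger variance than X2.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=5.0, scale=30.0, size=200),   # X1
    rng.normal(loc=2.0, scale=0.5, size=200),    # X2
])

X_norm, mu, sigma = normalize_features(X)
print("means after:", X_norm.mean(axis=1))
print("variances after:", X_norm.var(axis=1))
```

The function also returns `mu` and `sigma` so the same values can be reused later on other data, instead of computing new ones.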

CONCLUSION

If your input features come from very different scales, then it is important to normalize them. Performing this type of normalization pretty much never does any harm, so Andrew often does it anyway, even if he is not sure whether it will help speed up training for his algorithm.