반응형

This note is based on Coursera course by Andrew ng.

(It is just study note for me. It could be copied or awkward sometimes for sentence anything, because i am not native. But, i want to learn Deep Learning on English. So, everything will be bettter and better :))

INTRO

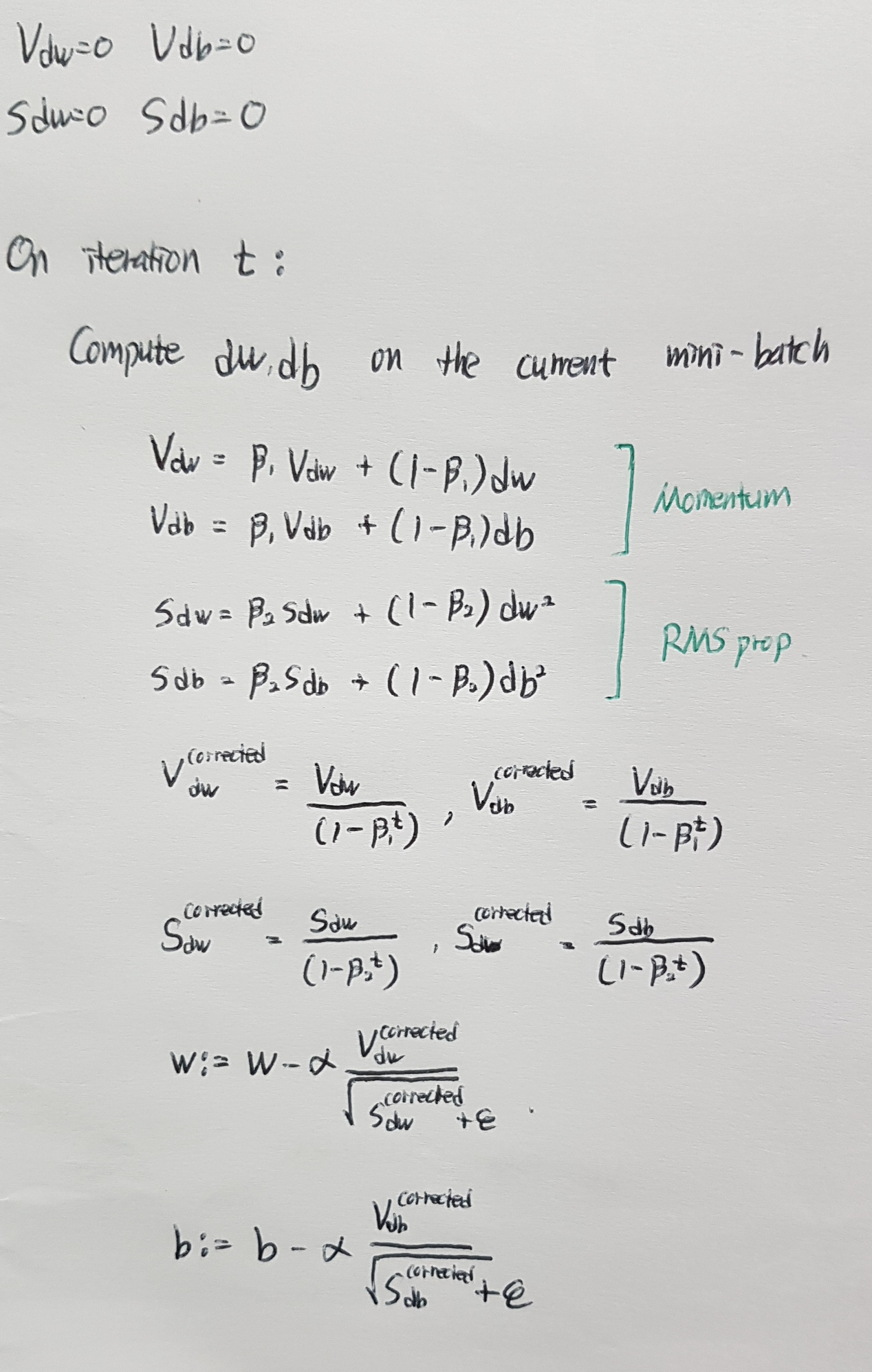

Adam stands for Adaptive Moment Estimation.

The Adam optimization algorithm is basically taking Momentum and RMSprop and putting them together.

MAIN

The algorithm has a number of hyper parameters.

- α : needs to be tune

- β1 : default is 0.9 (this is computing the moving weighted average of dw and db)

- β2 : default is 0.999 (this is computing the moving weighted average of dw^2 and db^2)

- ε : default 10^-8

CONCLUSION

반응형